More A/B Tests Won't Save You

The best testing programs we've seen aren't the ones that run the most tests. They're the ones where every test has a reason, every result gets analyzed with intellectual honesty, and the people doing the work are trusted to do it well. Volume is a byproduct of maturity, not a substitute for it.

Under the Hood at 33 Sticks: What Four Months Taught Me About Data, Strategy, and What Really Matters

I was hired without a CV. I learned that 2-hour meetings are a scam. And I discovered that being called 'difficult' often just means refusing to shrink into systems that weren't built for real innovation. A reflection on four months that permanently raised my standards for how I want to work.

Data Theater: What McKinsey's AI Report Actually Says (And What LinkedIn Gurus Won't Tell You)

McKinsey's latest AI research is everywhere on LinkedIn: "57% of jobs automated! $2.9 trillion opportunity!" But the viral posts are cherry-picking stats and stripping context. We read the actual 60-page report. Here's what decision-makers need to know instead.

The New Compliance Challenge Hiding in Your Optimization Stack

New York became the first state to require businesses to disclose when algorithms use personal data to set prices. The law took effect November 10, 2025, survived a constitutional challenge, and is being actively enforced. California is taking a different approach through antitrust law. Multiple other states have pending legislation. This is following the exact same trajectory as GDPR → state privacy laws, creating a compliance patchwork with no federal preemption in sight.

We Asked 3 LLMs to Build a Data Viz. Here's What Happened.

If you've been on LinkedIn lately, you've probably seen your fair share of posts about how "AI will replace your analysts!"

"ChatGPT just made my whole data team obsolete!"

We got curious. Not about whether AI can theoretically do analysis (we know it can be a very useful tool), but about whether it can deliver an actual work product. The kind of thing a marketing director might hand to their exec team. So we ran a simple test.

How to spot when someone cherry-picks data to tell you what they want you to believe.

We've seen it used on several subreddits, it made the rounds on Facebook, and of course it has been shared ad nauseam by LinkedIn thought leaders. But do the charts, as one poster on LinkedIn put it, make it obvious that we are not in a bubble?

The charts seem legit, although they have been so overshared at this point that the pixel quality has clearly degraded. The numbers seem authoritative. The argument feels data-driven.

But is it obvious?

Let's break down exactly what's going on here, and more importantly, how you can spot similar red flags in any analysis you encounter.

The claim.

“If this were a bubble, we'd see massive cash burn and unsustainable growth. But the numbers show real adoption, real revenue, and real productivity. What matters is net profit growth, something that frankly wasn't there at all in the dot com bubble. This is what separates AI from past 'bubbles'.”

The Map That Never Dies: Why This Misleading Electoral Visualization Still Works in 2025

The White House shared a viral electoral map that's been debunked for a decade. Here's why geographic county maps systematically misrepresent election results and why they'll never die.

How to Spot Bad Data: A Case Study in Corporate Research Theater

The viral Empower survey about Gen Z salaries? Methodologically worthless. Learn the 7 red flags to spot bad data and protect yourself from misleading research.

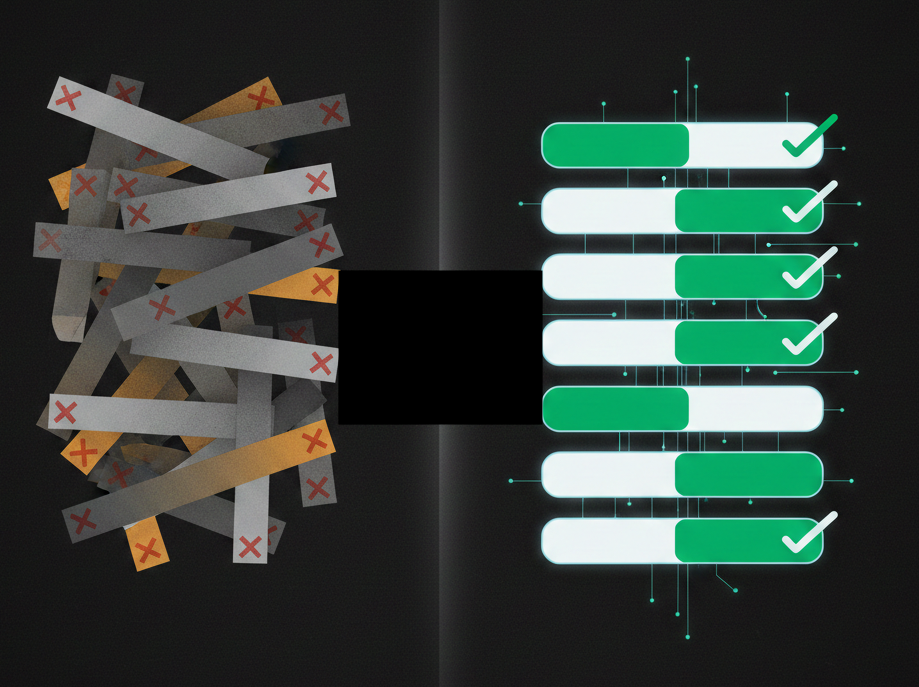

How to Prioritize A/B Tests: A Simple Framework for Choosing What to Run First

Learn how to prioritize A/B tests using the ICE framework. Score tests on impact, confidence, and ease to build a strategic testing roadmap that drives results.
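As a rough illustration of how ICE scoring can turn a backlog into a ranked roadmap, here is a minimal Python sketch. It assumes each dimension is scored from 1 to 10 and that the three scores are multiplied together; some teams average them instead. The test ideas and their scores are hypothetical, invented purely for the example.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    impact: int      # expected effect on the target metric, 1-10 (assumed scale)
    confidence: int  # how sure we are the effect is real, 1-10
    ease: int        # how cheap the test is to build and run, 1-10

    @property
    def ice_score(self) -> int:
        # Assumption: multiply the three scores; averaging is a common alternative.
        return self.impact * self.confidence * self.ease


def prioritize(ideas: list[TestIdea]) -> list[TestIdea]:
    """Return the ideas sorted from highest to lowest ICE score."""
    return sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)


# Hypothetical backlog, for illustration only.
backlog = [
    TestIdea("Simplify checkout form", impact=8, confidence=6, ease=4),
    TestIdea("Change CTA copy", impact=4, confidence=7, ease=9),
    TestIdea("Redesign pricing page", impact=9, confidence=5, ease=2),
]

roadmap = prioritize(backlog)
for rank, idea in enumerate(roadmap, start=1):
    print(f"{rank}. {idea.name} (ICE = {idea.ice_score})")
```

In this made-up backlog the easy, moderately impactful copy change outranks the high-impact redesign, which is the kind of trade-off the scoring is meant to surface for discussion rather than settle on its own.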

What’s the Minimum Sample Size You Need Before Trusting Your Test Results?

Learn how to calculate the right sample size to make A/B test results trustworthy without wasting time or traffic.
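One common way to estimate sample size is the standard two-proportion z-test approximation, sketched below in Python. This is an assumption about method, not necessarily the approach the article itself uses. The function name, the default 5% significance level, and the 80% power are illustrative choices, as are the baseline rate and lift in the usage lines.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    relative_lift: float,
    alpha: float = 0.05,   # two-sided significance level (assumed default)
    power: float = 0.80,   # probability of detecting the lift if it exists
) -> int:
    """Approximate visitors needed per variant for a two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)

    # Critical values from the standard normal distribution.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    # Sum of the binomial variances of the two conversion rates.
    variance = p1 * (1 - p1) + p2 * (1 - p2)

    n = (z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2
    return math.ceil(n)


# Hypothetical inputs: 5% baseline conversion, detecting a 10% vs. 20% relative lift.
n_small_lift = sample_size_per_variant(0.05, 0.10)
n_large_lift = sample_size_per_variant(0.05, 0.20)
print(f"10% relative lift: {n_small_lift} visitors per variant")
print(f"20% relative lift: {n_large_lift} visitors per variant")
```

The required sample grows roughly with the inverse square of the difference you want to detect, so halving the detectable lift approximately quadruples the traffic needed.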

Building Your First Optimizely Opal Custom Tool

Optimizely Opal's Custom Tools feature lets you extend the Opal AI agent's capabilities by connecting it to your own services and APIs. In this guide, we'll walk through creating a simple custom tool from scratch, deploying it to the cloud, and integrating it with Opal.

By the end of this tutorial, you'll have a working custom tool that responds to natural language queries in Opal chat. We'll keep it straightforward, no complex integrations, just the essentials you need to get started.

What Every Optimization Team Needs to Understand About Working With AI Whether They Use It for Code or Not

This article is about code generation, but the lessons apply to everything AI does in optimization. The difference between "CSS-only" AI and "almost everything" AI isn't the model you're using. It's the infrastructure you build around it. And that infrastructure—documentation, constraints, institutional knowledge—transforms how teams work with AI on any complex task.

Why "More Data" Won't Make Your AI Smarter

With AI, the temptation to move fast is almost irresistible. You get instant outputs, the thrill of novelty, the satisfaction of "adopting AI" before your competitors. It's a quick high.

But like any quick high, it wears off fast and leads to disappointment. Three months later, you're back where you started—except now you've also eroded team confidence in AI and wasted time on generic outputs that didn't move the business forward.

AI as a Forcing Function for Organizational Maturity

Most enterprise experimentation teams know they lack formalized process. They feel it daily in the endless prioritization debates, the ad-hoc QA checks, the experiments that launch without clear success criteria, the results that spark more questions than answers.

Why We Created "You Are The Analyst" — And What It Teaches About Real-World Analytics

If you're an analyst who's ever felt that nagging doubt when reviewing data that "looked right" but felt wrong, then this game is for you.

The $100,000 Question Nobody Wants to Answer

If your reason for implementing these technologies is "because everyone else is doing it," or "because our board is telling us we need to," or "because my friend at another company is doing it" - my experience has shown me, over and over again, that you're destined to fail.

You Don't Need to Be an Analyst to Think Like One

I need to tell you something that might change how you see yourself: you already have the capacity to be an analytical thinker. You don't need a statistics degree. You don't need to know how to query a database. You don't need to become an analyst.

The Maturity Paradox: Why Small Changes Drive Big Results in Enterprise Optimization

The CRO industry has it backwards. While agencies push "big, bold changes" and platforms promise "30% conversion lifts," the most sophisticated enterprise programs are finding massive value in subtle improvements that inexperienced teams would dismiss as trivial. This isn't about settling for small wins; it's about understanding how human psychology, business maturity, and compound growth actually work at scale.

Welcoming Our Newest ETSU Intern: Wambui Kang'ara

For Wambui, choosing 33 Sticks wasn't just about fulfilling an internship requirement; it was about finding the right environment for meaningful growth. With her experience, she sought a boutique agency with specialized offerings and a reputation for excellence.

"At this stage in my career, joining a boutique agency felt essential, somewhere with a tailored service offering and a reputation for excellence. I wanted more than just 'checking the box' for my master's internship, I wanted an enriching, really focused experience that truly aligned with my goals."

Perhaps most importantly, she was drawn to our people-first philosophy. "Success to me, is about building with integrity and people at the center. That people-first approach made me confident this is the right place to grow and receive invaluable mentorship."

The Danger of Data Overconfidence: Why Curiosity Beats Quick Conclusions

As data practitioners, our ability to spot patterns and extract insights can become our greatest weakness when overconfidence overrides curiosity. This article explores how even experienced analysts can fall into the trap of drawing sweeping conclusions from limited data, missing crucial business context that completely changes the story the numbers tell.

Drawing from real-world examples of analytical missteps—from misreading Adobe Analytics data to celebrating traffic that actually harmed conversions—we examine the warning signs of data overconfidence and provide practical strategies for building a more curious, empathetic approach to analysis.