How to Choose the Right A/B Testing Tool: People and Process Matter

A scoring framework to help you select the best A/B testing partner for YOU.

Adobe’s Opt-In Service for Consent Management

Adobe's Opt-In Service has some advantages I don't see folks talk about much:

1. It manages consent for all of your Adobe tools, without requiring you to add conditions to your existing Analytics logic

2. When your existing variable-and-beacon-setting logic runs while the user is opted out, Adobe queues that logic up, holding on to it just in case the user does opt in. Any Adobe logic can run, but no cookies are created and no beacons get fired until Adobe’s opt-in service says it’s ok.
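
The queue-until-consent behavior described above can be illustrated with a small sketch. This is not Adobe's API or implementation: `ConsentGate`, `run`, and `opt_in` are hypothetical names chosen only to show the general pattern, in which tracking logic is called unconditionally and a gate decides whether it fires now or is held.

```python
from typing import Callable, List


class ConsentGate:
    """Hypothetical stand-in for a consent service (not Adobe's API)."""

    def __init__(self) -> None:
        self.opted_in = False
        self._queue: List[Callable[[], None]] = []

    def run(self, action: Callable[[], None]) -> None:
        """Run the action now if consent exists; otherwise hold on to it."""
        if self.opted_in:
            action()
        else:
            self._queue.append(action)

    def opt_in(self) -> None:
        """Record consent, then flush held actions in their original order."""
        self.opted_in = True
        pending, self._queue = self._queue, []
        for action in pending:
            action()

    def pending_count(self) -> int:
        """Number of actions still waiting for consent."""
        return len(self._queue)


sent_beacons: List[str] = []  # stands in for network calls
gate = ConsentGate()

# Existing tracking logic needs no consent checks of its own.
gate.run(lambda: sent_beacons.append("page_view"))
gate.run(lambda: sent_beacons.append("cart_add"))
held_before_opt_in = list(sent_beacons)  # snapshot taken while opted out

gate.opt_in()  # the held actions fire here
```

The point of the design is that the tracking calls themselves never change. Only the gate knows about consent, which is why no extra conditions are needed in the existing logic.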

Things I am GRATEFUL for Learning During My Optimization Career

Roughly 15 years ago, I was honored and excited and nervous when I was tapped on the shoulder and asked to run the Optimization program for one of the largest financial institutions in the world. To up the ante a bit and make it even more challenging, no prior Optimization program was in place. So, in addition to driving the strategy, I also had to create the program from scratch.

Is Your Optimization Team GAMIFIED??

Think about how impactful it would be if employees from all levels of your organization – new hires to SVPs – were engaged and submitting test ideas, and if those employees cut across all departments. Your Optimization team would receive so many fresh test ideas that have never been thought of before. Also, many of these ideas would be directly tied to business objectives!

Hidden Gems to Unlock the Full Potential and Efficiency of your Analytics Team

One of my favorite parts of the work day is sitting quietly and thinking about strategies for our clients. More specifically, ideas and processes that help our clients become more successful at what they do, and in turn much happier at work. But I can’t accomplish this at the high level that I want to when my environment is messy and distracting.

Minimalization Optimization…Say What??

Simplicity and minimalization are directly tied to consumer psychology. A site experience that overwhelms a shopper is not good for business. Not only are the chances high that they will not purchase a product, there’s also a good chance that they will tell others about their experience because, as we all know, bad news travels much faster than good news.

Zen and the Art of Optimization

Have you ever read something in a magazine or book, or seen an idea online or on TV, and said to yourself, “That’s a great idea! Why didn’t I think of that??” Or maybe you attended an industry conference recently and heard another company present an idea that seemed so obvious that you couldn’t believe your team is not doing the same thing! Most of us want to be the person bringing in the high impact ideas that move the business needle. And at the same time, we get frustrated when we are not the ones to introduce the idea, and then often think to ourselves, “Why didn’t I think of that?”

Optimization - More Than a Means to an End?

Is Optimization a means to an end or an end in and of itself? Is it good enough to optimize your “null search results” page on your site simply for the sake of making it as functional as possible for your end user? Or are you doing it to improve conversions? Does the former automatically drive the latter? Does your senior leadership ask about conversion and revenue impact for every test and discard the test if there was none?

A Product Recommendations Framework – Where Does My Team Even Start??

You’ve been tasked by your Executive Leadership Team to increase orders and average order value (AOV) over the next 12 months. You have a solid plan in place, as you’ve worked on it for months. Yet there’s something nagging at you, like there could be something important missing in your strategy, and you’re not quite sure what is lacking.

10 Lessons From 10 Years in Business: Building the Right Team

Ten years ago, when Hila and I planted the seeds of 33 Sticks, we made a promise. This promise wasn't to ourselves, or investors, or even to the fast-paced analytics marketplace. It was a promise to the world: we would construct a company that consistently delivered incredibly positive experiences to our customers.

Building a Sustainable Analytics Practice

Embracing sustainable analytics practices is a critical step in ensuring long-term success for your organization and a healthier planet. By demanding ethical practices from technology vendors, hiring committed experts, and partnering with responsible agencies, businesses can create a sustainable analytics ecosystem that fosters growth, efficiency, and innovation. It's time to join the movement and make a lasting impact on our world.

Unlock the Hidden Power of Tertiary Metrics: Outperform Primary Metrics in A/B Testing for Breakthrough Results

If 25% of A/B tests run on the site result in a winner, what do we do with the other 75% of the tests that were run on the site that did not produce a winner? I like to call these tests INCONCLUSIVE AT THE PRIMARY METRIC LEVEL. I don’t like the term “losers” at all. Once you call a test a “loser,” your executive team stops listening. Trust me on this one, I see it happen all the time in meetings!

The Dark Side of the Leverage Model in Consulting Agencies: Prioritizing Profit Over People

If you are buying services from an agency, are you aware of the business model they are using?

Most agencies use what is called the leverage model. This model often results in highly negative client experiences, lower quality deliverables, and adverse effects on the mental health of consultants.

The Agency Landscape is Changing: Embracing a Client-Centric Approach

For years, many companies have had negative experiences with agencies. This dissatisfaction can be attributed to a variety of factors, including misaligned billing models, prioritizing growth over client outcomes, and viewing clients as transactional relationships. As the agency landscape changes, it's time for agencies to adapt and embrace a more client-centric approach.

Freeing up your Data Teams so they can Provide Actionable Business Insights

We would like to argue that data-driven test ideas are some of the most impactful test ideas to drive results and help your senior leaders achieve their goals. Testing an idea that has the potential to address checkout funnel fallout and decrease that rate is impactful. That being said, how much time does your data team spend developing and providing these insights?

Simplification and Adopting a "Less is More" Mindset in Digital Analytics

In this final article in the series, we will discuss the second and third concepts of sustainable digital analytics: simplification and adopting a "less is more" mindset. We will explain why it is essential to simplify the current ecosystem and collect less but more actionable data to create greater business impact. We will highlight how a tool-first approach can be detrimental to digital analytics health and provide tips on how to avoid it. Finally, we will discuss the benefits of adopting a "less is more" mindset, which can lead to more valuable data and maintainable MarTech stacks.

The Importance of People-First Approach in Digital Analytics

In this article, we will delve into the first concept of sustainable digital analytics, which is putting people first. We will discuss the Avinash 10/90 rule and why it is essential to understand stakeholders and customers as people. We will explain how data can be used to make people feel better about their jobs and create more positive customer experiences. We will also highlight the importance of prioritizing employee and contractor hires who think sustainably about analytics and partnering with agencies and consulting firms that value quality over quantity.

The State of Digital Analytics and the Need for Sustainability

In this article, we will explore the current state of digital analytics and why there is a need for sustainability in the field. We will discuss the challenges that businesses face due to poor digital analytics health, such as technical debt and decision-making on invalid data. We will explain the concept of sustainability in digital analytics, which involves designing and deploying analytics solutions in a future-proof way that is easy to maintain and emphasizes clean data. Finally, we will outline the benefits of adopting a sustainable approach to digital analytics.

Making Sense of A/B Test Statistics

Let’s use the term Confidence Interval as a case study in how easy it is to use stories to effectively communicate difficult terms.

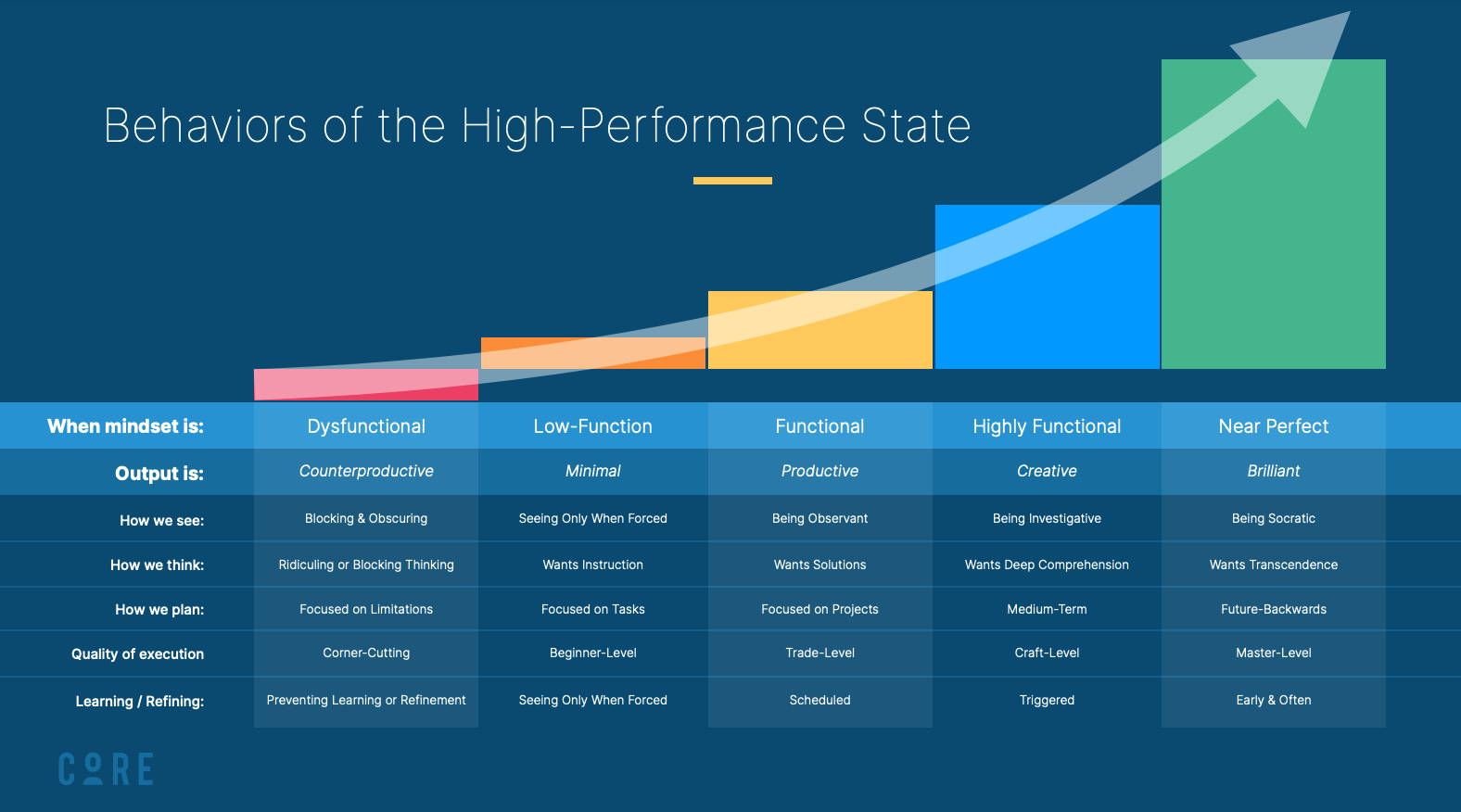
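
For readers who want to see the number behind the story, here is a minimal sketch of one common way to compute a confidence interval for the difference between two conversion rates (a normal-approximation, or Wald, interval). The visitor and conversion counts are made up for illustration, and the function name `diff_confidence_interval` is an assumption, not something taken from a particular testing tool.

```python
import math


def diff_confidence_interval(conv_a, n_a, conv_b, n_b, z=1.96):
    """Approximate 95% interval for (rate_b - rate_a); z=1.96 gives ~95%."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Standard error of the difference between two independent proportions.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical test: control converts 200 of 10,000 visitors,
# the variation converts 230 of 10,000.
low, high = diff_confidence_interval(200, 10_000, 230, 10_000)
print(f"Lift is plausibly between {low:.2%} and {high:.2%} (absolute)")
```

With these made-up numbers the interval runs from slightly below zero to about 0.7 percentage points, so the story for a stakeholder is: "the variation might be a little worse, might be noticeably better, and we need more data before calling it."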

Maximizing Employee Potential: The Importance of Investment in High Performance

Investing in your employees' well-being and professional development is a win-win situation for both the employees and the organization. It can lead to improved productivity, job satisfaction, and company culture, while also helping you attract and retain top talent and gain a competitive advantage in the market.